In his much-discussed book Homo Deus, historian Yuval Noah Harari sounds a warning about what the future could hold for humanity, presenting a vision of a dystopian world that may emerge as a result of technological development.

In this dystopian world, he says, only a handful of wealthy humans will be upgraded into super-humans (Homo Deus) with the power of artificial intelligence (AI) and biotechnology. They will exert control over, and may eventually wipe out, the vast majority who are left behind without being upgraded. This gives rise to the fear that we may fall behind the wave of evolution and go extinct, sharing the fate of mammoths and Neanderthals: modern Homo sapiens drove a series of large mammals, including mammoths, to extinction and outlasted their Neanderthal cousins under natural selection.

♦ ♦ ♦

Dystopian narratives are associated with the fundamental principle of natural selection, according to which the gap between the strong and the weak never shrinks but grows until the former eventually wipes out the latter. In his science fiction novel The Time Machine, H. G. Wells tells a story about the world some 800,000 years into the future, in which the human race has evolved into two species as a result of an extreme widening of the gap between the capitalist and working classes. What he depicted there is precisely the power of natural selection. Homo Deus reworks this basic structure.

One possible counterargument to these dystopian narratives comes from the theory of directed technical change, which claims that the trend of widening gaps will (or may) eventually be reversed. As Massachusetts Institute of Technology (MIT) Professor Daron Acemoglu argues, changes in industrial technology occur in the direction of conserving relatively scarce factors of production and making heavy use of relatively abundant factors. For instance, in the 19th century, labor-intensive technological innovation occurred in the labor-abundant and land-scarce United Kingdom, whereas capital-intensive technological innovation occurred in the labor-scarce and land-abundant United States.

It is expected that advances in AI and other technologies will make human labor unnecessary in various industries, giving rise to concern over job losses. However, when the number of people in what Professor Harari calls the "useless class" (i.e., unskilled low-wage workers) increases, technological innovation will occur in the direction of making use of them as a production factor. That is what the theory of directed technical change predicts will happen. And when that happens, demand for human labor will increase, wages will rise, and the gap will narrow.

After gaps and inequalities expanded tremendously in the 19th century, new technological innovations such as the introduction of mass production occurred in the first half of the 20th century. These led to the rise of a massive middle class, a reduction in wealth gaps and inequalities, and the advent of civil society. Who can deny the possibility that the same will happen in the coming years?

♦ ♦ ♦

Another counter-argument to the dystopian view of the world—one in which the strong (super-humans) wipe out the weak—involves fallibility, or the possibility of making mistakes. Presumably, advances in AI would inevitably give birth to a political philosophy based on the idea that all beings are fallible.

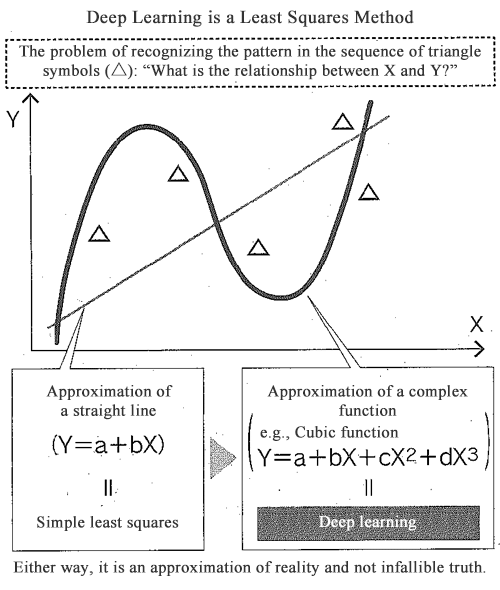

Deep learning, a key AI technology that has developed remarkably in recent years, is essentially nothing more than a simple least squares method, a type of approximate computing that minimizes errors. In addition, the mechanism of human intelligence, which has long been an unfathomable mystery, is nothing but a collection of simple approximations, and the discovery of this fact is what defines the nature of AI's impact (see the figure).

Since intelligence in AI is an approximation, it is not infallible truth, and neither is human intelligence. Any knowledge produced by intelligence, whether that of AI or of humans, is an approximation of reality and fallible in the sense that it may eventually be proven wrong. This idea was already widely accepted with regard to scientific knowledge before AI came into existence.
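
The least squares idea mentioned above can be illustrated with a minimal sketch. It is not a deep learning system: it fits only a straight line, whereas real networks have vastly more parameters and often use other error measures. The data points and the names `fit_line` and `mse` are made up for this illustration. What it shows is the shared principle of reducing error step by step, and that the error left at the end is still not zero.

```python
def mse(xs, ys, w, b):
    """Mean squared error of the line y = w*x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_line(xs, ys, lr=0.01, steps=5000):
    """Fit y ~ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals: current prediction minus observation.
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        # Gradient of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errs, xs))
        grad_b = 2.0 / n * sum(errs)
        # Move a small step in the direction that reduces the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical noisy observations lying roughly along y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]

w, b = fit_line(xs, ys)
remaining_error = mse(xs, ys, w, b)
print(f"w={w:.3f}, b={b:.3f}, remaining error={remaining_error:.4f}")
```

The fitted line is the best available approximation of these points, yet the remaining error stays above zero. In that limited sense the model is useful and fallible at the same time.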

Wiping out others on the premise of one's own infallibility is how the mechanism of natural selection has worked in human society and biological evolution. However, evolution as we have known it to date is an "inefficient" one, which lets many forms of society (or species) stray into an evolutionary dead end and go extinct.

A society premised on the recognition of one's own fallibility should be a tolerant society that embraces diverse beings acting on free will. Those who recognize their own fallibility would recognize the possibility of being surpassed by others, in which case the rational choice is to preserve those others and make use of their excellence instead of wiping them out. Even if AI-augmented super-humans come into being, they would strive to maintain a diverse society and coexist with the weak and vulnerable as long as they recognize their own fallibility.

♦ ♦ ♦

In fact, such a social system is nothing new. A market system envisioned by economist Friedrich Hayek is the archetype of a social system premised on fallibility.

In his 1945 article "The Use of Knowledge in Society," Hayek argued that the wealth of implicit knowledge that exists only in a particular time and place can be aggregated only through the price mechanisms of the market. He emphasized that a society in which markets are placed under the control of a central planning agency or a dictator (constructivism) is doomed to fail. Hayek's market system is a society in which diverse beings coexist and their freedom takes the utmost priority. The reason why freedom must be given the top priority also derives from fallibility.

In a society where no one knows infallible truth, guaranteeing the right or freedom to do wrong (trial and error) is the most rational method to aggregate information that is scattered in bits and pieces, each existing in a particular time and place. One might argue that other regulatory efforts (e.g., market regulation by AI) can enable the aggregation of market information to create an efficient society. However, under the premise of fallibility, the rules themselves may be wrong, which would make the guarantee of individual freedom a better choice.

Super-humans would predict the risk of extinction, such as straying into an evolutionary dead end as a result of evolving in a definite direction, and thus seek to maintain biodiversity and the diversity within the human race. In order to realize such an economy, we must develop a new strand of liberal political philosophy that recognizes and respects the unique existential value of AI-augmented humans as well as of the vast mass of humans who are not AI-augmented, and even of other animals and plants.

However, maintaining a liberal society in the presence of new technology may be different from maintaining democracy as we know it. Preaching the significance of a market system in which multitudes of people, each with unique value and information, come together, Hayek devoted his life to preserving and fostering a liberal culture. In his later years, he expounded on ways to reform the legislature, understanding that political reform is crucial to the realization of a truly liberal society. What he had in mind was a type of limited democracy (generational representation system). He did not expect that unlimited democracy based on a one-person-one-vote system would work.

Hayek argued that the legislature's power to legislate is not unlimited and that nomos, a universal rule of just conduct applicable to all persons, should be found in the market as the accumulated knowledge of people. The primary role of the legislature, he says, is to discover such universal rules. The task of creating thesis, a purposive command for distributing resources (for example, allocating public projects), is completely different from that of discovering nomos. He proclaimed that confusion between these two distinct notions of nomos and thesis was causing modern parliamentary politics to malfunction. His point, that democracy is apt to fall into populism and must be corrected to preserve freedom, is of profound significance.

It is not a certainty that the fundamental principle of natural selection will continue to hold true as has been the case in the evolution of creatures to date. In order to prevent a bleak future from happening, we are now required to reshape democracy into a more relevant form and find a new strand of political philosophy premised on fallibility.

* Translated by RIETI.

February 18, 2019 Nihon Keizai Shimbun