How should we live our lives at present and into the near future as artificial intelligence (AI) continues to develop and spread? What is an appropriate framing for our future vision of society and what kind of public philosophy can we cultivate?

I will first touch upon the theory of strong isomorphism. It regards both the development of human intelligence and the expansion of new knowledge due to AI as evolving with a similar mechanism. It means that the development of intelligence takes place in an ever-changing universe. Under the modern scientific worldview, human reason was explained as something that seeks to understand the mechanisms of the physical world from the outside. Even under the mechanistic worldview that was prevalent around the 19th century, both the universe and humans were conceived as "ex-machina" structures. However, reason, which understands and controls the mechanisms, was thought to be outside of the physical universe. This can be compared to a situation where a physicist is observing the inside of the laboratory from the outside. However, with the arrival of AI, we are faced with the reality that even reason exists within the physical universe.

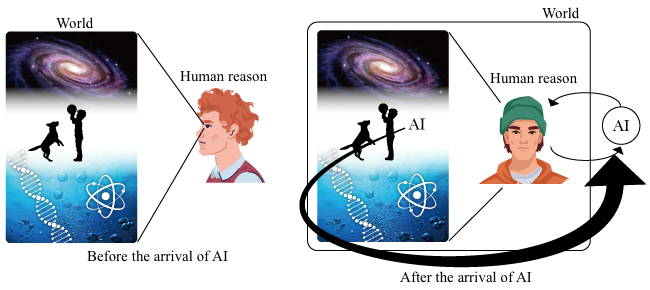

We called this new worldview, which regards the development of human intelligence (as well as the expansion of knowledge due to AI) as one of the order-forming processes that occur in the universe, the theory of strong isomorphism (see The Relativity of Intelligence: How AI will change the worldview (available in Japanese) by Nishiyama Keita, Matsuo Yutaka, and Kobayashi Keiichiro). The notion that reason looks at the physical universe from the outside, which has been an implicit premise of the modern worldview, is falling apart. The figure summarizes what is stated above.

First, the cognitive structure of the world before the arrival of AI is as described in the left panel of the figure. Humans, as the pinnacle of creation, observe the physical universe (from the outside). This is a reductionist worldview that assumes that "human reason" is something independent of the physical world, which observes and is able to control items within the universe.

As a result of the arrival of AI, which transcends human reason, the structure of the universe is as illustrated in the right panel of the figure. The physical world as perceived by human reason is a subset of the world as perceived by AI (which has greater perception abilities), and therefore, a Markov Blanket structure (to be explained later), in which what is perceived by AI exists externally and what is perceived by humans exists internally, arises as a framework for perceiving the world. AI, which is a "black box" whose internal workings are beyond human understanding, exists "outside" of human reason, and various pieces of knowledge are passed as inputs from the "outside" to human reason, without being understood. By responding to inputs from the outside, humans try to deepen their understanding of themselves and increase their chances of survival and sustainability.

Therefore, humans will be forced to adapt to the arrival of AI within this new cognitive structure.

Here, I would like to provide supplementary explanations about the theory of strong isomorphism. The theory of strong isomorphism as a "new cognitive structure" can be understood as a sort of "rational expectations equilibrium." This structure is simply one framework for perceiving the world. According to Nishiyama Keita, a Markov Blanket represents a state in which there is a border between the inside and the outside, and in which the intensity of the output from the inside in response to an input from the outside is determined in a way that maintains internal homeostasis. The theory of strong isomorphism predicts that such a Markov Blanket structure emerges during any process that creates order of any type in the world.

We can understand this new cognitive structure under the theory of strong isomorphism as a sort of rational expectations equilibrium. First, I will provide an overview of rational expectations, which is a central concept of modern economics, and then, I will discuss the relationship between rational expectations equilibrium and the theory of strong isomorphism.

Over the past century, economics has modeled itself on mathematics and the natural sciences (particularly physics and engineering, including optimal control theory), and it is hard to deny the perception that economics has merely mimicked the methodologies of the natural sciences. However, in my view, rational expectations equilibrium is an exception: it is a concept that may be acclaimed as an original idea of economics, and not mimicry of the natural sciences.

Rational expectations equilibrium exactly represents the worldview described in the right panel of the figure. In other words, it is a worldview assuming that humans look at the universe from the inside (that humans themselves are aware of looking at the universe from the inside). This is a worldview that is a precursor to the cognitive structure delivered by the arrival of AI. In other words, this new cognitive structure (the right panel of the figure) may be regarded as a sort of rational expectations equilibrium as conceived by economics. This may mean that as a result of the arrival of AI, the framework of "knowledge" in general, including knowledge of the natural sciences, has conformed to economics.

Let me return to the discussion of rational expectations equilibrium in economics. Take for example a country's economic system. Humans determine how to behave (e.g., determine the amount of capital stock that they themselves hold (=k)) based on expectations that they have about the state of the country's economic system (e.g., the expectation that the total amount of capital stock in the country will become K tomorrow), and the results of their behavior determine the state of the economic system (K=Nk; N represents the country's population size). As humans have a prior understanding of the principle that their behavior determines the state of the economy (K=Nk), the prior expectation K should be matched by the realized state Nk. In economics, when a prior expectation K is matched by the state realized ex post, we say that the expectation is "rational." Therefore, the state of the economic system K determined as above is called a rational expectations equilibrium.

Here, as in the right panel of the figure, a sort of self-referential loop is formed. In short, the expectation K that individuals have about the state of the economic system influences their behavior k, and the aggregate of their behavior k represents the state of the system K' (=Nk). If the expectation is rational, the prior expectation is matched by the realized state; that is, K=K'. Thus, a loop is created, with human behavior k and the state of the system K continuing to alternately determine each other.
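The loop described above can be illustrated with a small numerical sketch. It is only an illustration under assumptions that are mine and not part of the original argument: the behavioral rule `individual_choice` (each person holds k = a + b·K/N given the expectation K) and the parameter values a, b and N are hypothetical. The sketch repeatedly feeds the realized state K' = Nk back in as the next expectation until K = K'.

```python
def individual_choice(expected_K, N, a=1.0, b=0.5):
    """Hypothetical behavioral rule (an assumption for illustration only):
    each individual holds k = a + b * (expected_K / N)."""
    return a + b * expected_K / N


def rational_expectations_equilibrium(N, K0=0.0, tol=1e-9, max_iter=10_000):
    """Iterate expectation K -> behavior k -> realized state K' = N * k
    until the prior expectation matches the realized state (K = K')."""
    K = K0
    for _ in range(max_iter):
        k = individual_choice(K, N)   # behavior given the expectation K
        K_realized = N * k            # aggregate state K' = N k
        if abs(K_realized - K) < tol:
            return K_realized         # expectation is (numerically) rational
        K = K_realized                # the realized state becomes the new expectation
    raise RuntimeError("the loop did not settle on a fixed point")


# With N = 100, a = 1.0, b = 0.5 the fixed point solves K = N*a + b*K, i.e. K = 200.
K_star = rational_expectations_equilibrium(N=100)
```

Under this assumed rule the loop settles because b is less than 1; with a different rule the loop may settle elsewhere or not at all, which is why the rule should be read as a placeholder and not as a claim about actual behavior.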

A loop between humans and a system like this does not exist in the world of the natural sciences (except in the measurement problem of quantum mechanics). That is because the conventional natural sciences are built on the cognitive structure described in the left panel of the figure. In other words, in the world of the conventional natural sciences, when humans (observers) observe the system, they do so from outside of it without influencing it.

There could be various states of rational expectations equilibrium. For example, if individuals have the expectation A, the economic system arrives at the state of rational expectations equilibrium A, while if they have the expectation B, the economic system arrives at the state of rational expectations equilibrium B, as their behavior is different compared with that under the expectation A. A bank run is a case in point. If individuals expect that a certain bank has no risk of failure, depositors will continue to keep their deposits at the bank, with the result that the bank will actually remain in business. Conversely, if the expectation that a certain bank will fail spreads, depositors will scramble to withdraw deposits from the bank en masse, with the result that the bank will become insolvent and fail even if its financial condition would have otherwise been sound.
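The bank run example can be sketched in the same spirit. The setup below is a deliberately crude assumption of mine: all depositors are identical, the bank can pay out at most a hypothetical `LIQUID_RESERVE_RATIO` of deposits, and every depositor withdraws whenever the expected share of withdrawals exceeds that ratio. An expectation is "rational" when the share of withdrawals it induces equals the share that was expected.

```python
LIQUID_RESERVE_RATIO = 0.2  # hypothetical: share of deposits the bank can pay out at once


def realized_withdrawal_share(expected_share, reserve_ratio=LIQUID_RESERVE_RATIO):
    """Assumed behavior of identical depositors: if expected withdrawals exceed
    what the bank can pay, everyone expects failure and withdraws (share 1.0);
    otherwise everyone keeps their deposits (share 0.0)."""
    bank_expected_to_fail = expected_share > reserve_ratio
    return 1.0 if bank_expected_to_fail else 0.0


def is_rational_expectation(expected_share):
    """The expectation is rational if it is matched by the realized state."""
    return realized_withdrawal_share(expected_share) == expected_share


candidate_expectations = (0.0, 0.1, 0.5, 1.0)
equilibria = [s for s in candidate_expectations if is_rational_expectation(s)]
# Two self-confirming states survive: 0.0 (nobody runs) and 1.0 (everybody runs).
```

The same bank, with the same reserves, ends up in either state depending only on what depositors expect, which is the sense in which expectation A leads to equilibrium A and expectation B to equilibrium B.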

Despite the above, it is important to understand that even if the cognitive structure under the theory of strong isomorphism is a sort of rational expectations equilibrium, it may not be the only cognitive system.

That means that there are the following reservations with respect to the theory of strong isomorphism. The fundamental structure of a human as a living organism is a Markov Blanket structure, and as a result, in the eyes of humans, whose perception of the world is geared toward achieving the objective of their own survival, the Markov Blanket structure becomes a principle upon which the world order is based. This cognitive structure (expectation) is justified as a rational expectation because of the human characteristic explained above, namely that humans have a Markov Blanket structure and seek their own survival. In other words, if there are cognitive entities that do not have a Markov Blanket structure (non-human entities), those entities may experience an order other than a Markov Blanket structure.

The cognitive structure under the theory of strong isomorphism is merely one possible outlook on the world that may be adopted for the purpose of achieving the survival, prosperity and sustainability of humans, as particular cognitive entities, and we do not rule out the possibility that there may be an entirely different outlook on the world. The theory of strong isomorphism is a cognitive framework wherein humans look at AI as something that is beyond human reason (for the purpose of their own survival and sustainability).