Technological innovation is a driving force of economic growth and social change, and disruptive innovation in particular can drastically reshape existing industrial structures and market environments. For policymaking and industrial strategy, discovering such technologies early and assessing their impact objectively is a significant challenge. In meta-science, assessment has conventionally been qualitative, but research on quantitative approaches, such as big data analysis, is also advancing. Here, the author introduces approaches developed in recent years and uses them to analyse studies undertaken in Japan.

1. Which studies changed the course of science? (Disruption Index)

The Disruption Index (D-Index) measures how a research paper affects the citation network after its publication, that is, whether the paper is disruptive ("gives birth to a new trend") or developmental ("deepens existing trends"). The index was developed in 2019 by a research team from the University of Chicago in the United States and other institutions, and was published in Nature (Wu et al., 2019).

To calculate the D-Index, the papers published after the focal paper are classified into three types:

- A paper that cites the focal paper but none of its references (Nonly)

- A paper that cites both the focal paper and at least one of its references (Nboth)

- A paper that does not cite the focal paper but cites at least one of its references (Nrefonly)
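Following the definition in Wu et al. (2019), the three counts are combined into a single score:

$$
D = \frac{N_{\text{only}} - N_{\text{both}}}{N_{\text{only}} + N_{\text{both}} + N_{\text{refonly}}}
$$

Papers counted in Nrefonly add only to the denominator, so a large body of later work that bypasses the focal paper and cites its references directly pulls the score toward 0.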

Applying the D-Index to existing research picks out papers that created new paradigms in their fields and papers that significantly changed established frameworks. The D-Index is 1 for a completely disruptive paper and -1 for a completely developmental one.
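The classification and the score described above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation used by Wu et al. (2019) or by the datasets cited in this article: it assumes the caller already has the focal paper's reference list and the reference lists of the papers published after it, and the paper IDs in the example ("F", "R1", and so on) are hypothetical.

```python
# Minimal sketch of the D-Index calculation.
# Assumption: the caller supplies the focal paper's references and the
# reference lists of papers published AFTER it; paper IDs are hypothetical.
from collections.abc import Iterable


def disruption_index(focal: str, focal_refs: set[str],
                     later_papers: Iterable[set[str]]) -> float:
    """Return D = (N_only - N_both) / (N_only + N_both + N_refonly)."""
    n_only = n_both = n_refonly = 0
    for cited in later_papers:
        cites_focal = focal in cited
        cites_refs = not focal_refs.isdisjoint(cited)
        if cites_focal and not cites_refs:
            n_only += 1      # cites the focal paper but none of its references
        elif cites_focal:
            n_both += 1      # cites the focal paper and at least one reference
        elif cites_refs:
            n_refonly += 1   # skips the focal paper, cites its references
        # papers citing neither the focal paper nor its references are ignored
    total = n_only + n_both + n_refonly
    if total == 0:
        raise ValueError("no later paper cites the focal paper or its references")
    return (n_only - n_both) / total


if __name__ == "__main__":
    refs = {"R1", "R2"}
    print(disruption_index("F", refs, [{"F"}, {"F", "X"}]))                  # 1.0
    print(disruption_index("F", refs, [{"F", "R1"}, {"F", "R2"}]))           # -1.0
    print(disruption_index("F", refs, [{"F"}, {"F"}, {"F", "R1"}, {"R2"}]))  # 0.25
```

Restricting the input to papers published after the focal paper matters: if the focal paper's own references were included, those that cite one another would be miscounted as Nrefonly.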

The latest paper by Lin et al. (2025), which analysed a total of 49 million research papers published between 1800 and 2024, compared D-Index scores with the results of expert interviews. The papers that experts rated among the most disruptive in the world all show high D-Index scores: Watson and Crick's paper elucidating the molecular structure of DNA (D=0.96), Mandelbrot's paper on fractals (D=0.95), and Lorenz's paper on deterministic nonperiodic flow (the butterfly effect) (D=0.81). By contrast, Nash's paper on non-cooperative game theory (the Nash equilibrium), for example, shows a relatively low D-Index score (D=0.28). Nash's paper developed the content of an earlier work on game theory by John von Neumann et al. published seven years before, which likely explains why it is judged relatively developmental.

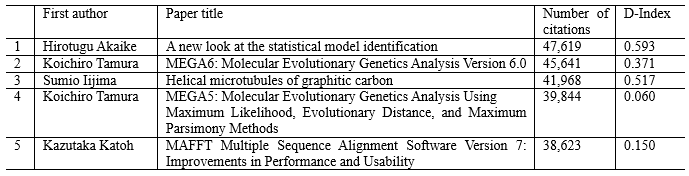

Let us now turn to the evaluation of Japanese research with the D-Index. Using open data released by Harvard University this February (Li et al., 2025), the author compared the top five papers ranked by D-Index with the top five ranked by number of citations, the measure most often used to evaluate research papers.

The most cited paper is the late Hirotsugu Akaike's paper on the Akaike Information Criterion (AIC), which has been cited 48,000 times. Interestingly, two papers from the MEGA series, software for building molecular phylogenetic trees, by Prof. Koichiro Tamura (Tokyo Metropolitan University) et al. rank in the top five, but their D-Index scores are relatively low (0.371 for MEGA6, ranked second, and 0.06 for MEGA5, ranked fourth). This reflects the fact that these papers build on previous research, each describing a new version of existing software.

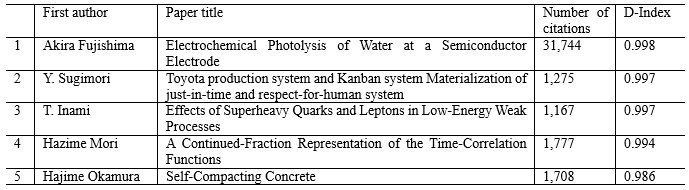

By D-Index, by contrast, the top-ranked paper is Prof. Akira Fujishima's paper on photocatalysis, with a score of 0.998. It is followed by "Toyota production system and Kanban system," presented at an academic conference by Sugimori et al. of Toyota Motor Corporation, and a paper on quarks and leptons by Prof. Takeo Inami (Chuo University) et al. These two papers show extremely high D-Index scores even though they have roughly an order of magnitude fewer citations than the most-cited papers. They can be regarded as studies that introduced new concepts.

2. What is an unprecedented idea? (Determining Novelty)

The biggest weakness of the D-Index is that it can only evaluate past studies, because it relies on the papers published after them. A newly published paper has no subsequent papers yet, so its D-Index cannot be calculated. Novelty measures address this problem by comparing a paper with the research that preceded it. The major approaches are briefly introduced below (following the classification of Iori et al. (2025)).

- Categories’ first occurrence: the number of pairs in a paper's combination of cited documents, journals, or fields that do not appear in past literature data (Wang et al., 2017)

- Categories’ distance: how unusual a paper's combination of cited documents, journals, or fields is compared with past literature data (Uzzi et al., 2013; Lee et al., 2015)

- Text first occurrence: the number of word pairs in a paper's abstract that do not appear in past literature data (Wang et al., 2017)

- Text distance: how unusual the combination of words in a paper's abstract is compared with past literature data, measured over the average pair or the most unusual pair (Shibayama et al., 2021)
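The "first occurrence" and "distance" ideas can be illustrated with a simplified Python sketch. It is not any of the cited authors' methods: the published measures add steps such as weighting, normalisation or corpus-trained word embeddings that are omitted here, and the field names, past pairs and two-dimensional word vectors are hypothetical toy data. The same pair-counting function applies whether the items are journals, fields or abstract words.

```python
# Simplified sketch of "first occurrence" and "distance" novelty measures.
# All data in the example is hypothetical toy data, not from any study.
import math
from itertools import combinations


def pairs(items):
    """Return all unordered pairs of distinct items as sorted tuples."""
    return {tuple(sorted(p)) for p in combinations(set(items), 2)}


def first_occurrence(items, past_pairs):
    """Count the pairs in `items` that never appear in `past_pairs`."""
    return len(pairs(items) - past_pairs)


def cosine_distance(u, v):
    """Return 1 minus the cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return 1.0 - dot / (math.hypot(*u) * math.hypot(*v))


def combination_distance(words, vectors):
    """Return (average, maximum) cosine distance over all word pairs.

    `vectors` maps each word to a vector; a real study would use embeddings
    trained on a large corpus instead of hand-written toy vectors.
    """
    dists = [cosine_distance(vectors[a], vectors[b]) for a, b in pairs(words)]
    if not dists:
        raise ValueError("need at least two distinct words")
    return sum(dists) / len(dists), max(dists)


if __name__ == "__main__":
    # Hypothetical field pairs already seen in past literature.
    past = {("biology", "chemistry"), ("chemistry", "physics")}
    print(first_occurrence(["biology", "chemistry", "physics"], past))  # 1

    # Hypothetical 2-D word vectors.
    toy_vectors = {"catalyst": (1.0, 0.0), "oxide": (0.8, 0.6), "water": (0.0, 1.0)}
    avg, most = combination_distance(["catalyst", "oxide", "water"], toy_vectors)
    print(round(avg, 3), round(most, 3))  # 0.533 1.0
```

Reporting both the average and the maximum mirrors the choice mentioned for text distance: the average reflects how unusual the combination is overall, while the maximum highlights the single most unexpected pairing.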

3. Developing new technology policy tools

Any of the quantitative assessment approaches introduced here may make it possible to identify disruptive and innovative technologies objectively from literature data. This would help policymakers discuss which fields should receive intensive support and identify areas of risk.

In practice, national governments have begun to use these approaches to set priority areas and analyse risks. In the United Kingdom, UK Research and Innovation (UKRI) will host the "Metascience Novelty Indicators Challenge" this autumn. This high-profile initiative invites the general public to propose new indicators for identifying novelty in research, with an award of 300,000 pounds (approx. 60 million yen). The challenge is co-hosted by Elsevier, which operates a research article database, together with RAND Europe and the University of Sussex, and winners can also receive support from these co-hosts.

At the same time, it is important to recognize the limitations of quantitative indicators and avoid over-reliance on them. Newly published work cannot be evaluated with the D-Index, and novelty scores may vary depending on how fields and words are selected. It is also open to question whether trends in advanced technology are captured by research publications in the first place. In actual operation, therefore, qualitative assessments and quantitative indicators need to be combined appropriately. How can we rapidly ascertain the disruptiveness and novelty of new technologies and make use of them in policy? We need to keep building knowledge in meta-science and to promote its application and verification both in Japan and internationally.

July 9, 2025

>> Original text in Japanese