Difficulty of collecting data for policy evaluation

When I was working on my doctoral thesis from 2008 through 2011, collecting data for analysis was one of the toughest tasks. My thesis was on the effectiveness of public policies to support startups, and I analyzed the effects of the Organization for Small & Medium Enterprises and Regional Innovation (SME Support, JAPAN)'s funding program designed to provide equity finance to startups (hereinafter "Startup Fund Program").

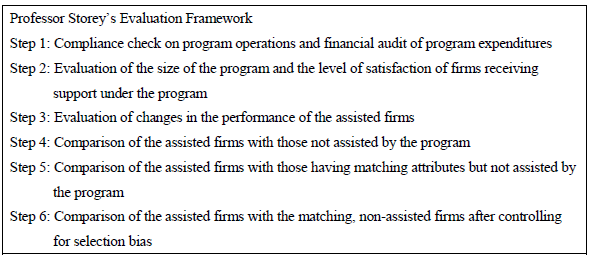

In undertaking this analysis, I referred to the "Six Steps to Heaven" evaluation framework (Figure 1) (Note 1) developed by Professor David Storey, an expert advisor to the Organisation for Economic Co-operation and Development (OECD) on policy evaluation. The six steps remain a model approach for evaluating public policies for SMEs at the OECD.

Back then, Harvard University Professor Josh Lerner's research on the U.S. government's Small Business Innovation Research (SBIR) program (Note 2) was attracting much attention, and he used Step 5, the second most sophisticated evaluation approach, in his analysis. Thus, I made it my goal to conduct my analysis at a comparable level of sophistication. This meant that I had to collect data on firms assisted by the Startup Fund Program, create a control group consisting of firms with matching attributes (size, industry, etc.) that were not assisted by the program, and examine how the sales, number of employees, etc. of the assisted firms changed under the program compared with those of the control group.
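As a rough illustration of this matched control group comparison, the following Python sketch pairs each assisted firm with the non-assisted firm in the same industry that is closest in size, then averages the difference in sales growth. The `Firm` fields, the matching rule, and the sample figures are hypothetical and are not taken from the thesis.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class Firm:
    # Hypothetical record layout, chosen only for this illustration.
    name: str
    industry: str
    employees_before: int
    sales_before: float
    sales_after: float


def sales_growth(firm: Firm) -> float:
    """Relative change in sales over the evaluation period."""
    return (firm.sales_after - firm.sales_before) / firm.sales_before


def match_control(assisted: Firm, pool: list[Firm]) -> Firm | None:
    """Pick the non-assisted firm in the same industry closest in size."""
    candidates = [f for f in pool if f.industry == assisted.industry]
    if not candidates:
        return None
    return min(
        candidates,
        key=lambda f: abs(f.employees_before - assisted.employees_before),
    )


def matched_growth_gap(assisted_firms: list[Firm], pool: list[Firm]) -> float:
    """Average (assisted growth - matched control growth) over matched pairs."""
    gaps = []
    for firm in assisted_firms:
        control = match_control(firm, pool)
        if control is not None:
            gaps.append(sales_growth(firm) - sales_growth(control))
    if not gaps:
        raise ValueError("no assisted firm could be matched")
    return mean(gaps)


# Made-up sample data.
assisted_firms = [
    Firm("A1", "software", 10, 100.0, 150.0),
    Firm("A2", "biotech", 20, 200.0, 220.0),
]
control_pool = [
    Firm("C1", "software", 12, 120.0, 132.0),
    Firm("C2", "software", 50, 500.0, 600.0),
    Firm("C3", "biotech", 18, 180.0, 189.0),
]
gap = matched_growth_gap(assisted_firms, control_pool)
print(f"average growth gap: {gap:.3f}")
```

A positive gap only suggests that assisted firms grew faster than their matches. As the sketch makes plain, the result depends entirely on how good the matches are.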

However, before too long, I encountered a big problem. Collecting data on control group firms was not easy. Startups, which are laden with growth potential and by nature fraught with uncertainties (Note 3), vary significantly in the pace of their growth. Those assisted by the Startup Fund Program were at varying stages of development, and it was difficult to find matching firms for them.

Eventually, I turned to Teikoku Databank's Corporate Profile Database (COSMOS2) (Note 4) and, with much difficulty, identified 233 firms that had attributes similar to those of the 161 assisted firms but were not assisted by the program. A comparison of the two groups found that the assisted firms had increased their sales and the number of employees. Also, by performing a multiple linear regression analysis, I was able to confirm that the productivity of assisted firms had increased significantly. Thus, I managed to complete my thesis, but I had to devote a significant portion of the time spent on this research to scrambling for data.

Encounter with a new analysis technique

Five years later, I am now engaged in supporting startups in the government, and in the course of performing my duties, Stanford University Professor Takeo Hoshi introduced me to a new analysis technique.

He advised me to read a paper (Note 5) written by Sabrina Howell, who studied under Professor Lerner. The paper analyzes the effects of the SBIR program on new ventures in the energy sector, using a new technique, the regression discontinuity (RD) approach.

As mentioned above, the conventional approach to measuring policy effects has been to create a matching group of firms that have attributes similar to those of policy-assisted firms but do not take advantage of the policy, and to compare the two groups to identify the differences. However, this approach has its shortcomings. A great deal of time and energy must be devoted to collecting and developing data. It cannot control for the bias arising from differences between firms that are willing to take advantage of public policies and those that are not. It also cannot evaluate the possibility that policymakers might have selected firms that would have been successful even in the absence of the policy (winner picking).

The RD approach considers a policy program under which firms' eligibility for support is determined based on objective measures such as scores given by a panel of judges. It estimates the effectiveness of the program by collecting data on the performance (sales, employment, etc.) of those firms selected or not selected by a bare margin and comparing changes in their performance before and after the inception of the program.
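The comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not the method used in any of the studies cited. It assumes that applicants scoring at or above a hypothetical `cutoff` are selected and that "a bare margin" means within `margin` points of that cutoff. All figures are made up.

```python
from statistics import mean


def near_threshold_effect(applicants, cutoff, margin):
    """Compare before/after changes for narrow winners and narrow losers.

    applicants: iterable of (score, performance_before, performance_after).
    Firms scoring in [cutoff, cutoff + margin) count as barely selected;
    firms scoring in [cutoff - margin, cutoff) count as barely rejected.
    """
    winners = [
        after - before
        for score, before, after in applicants
        if cutoff <= score < cutoff + margin
    ]
    losers = [
        after - before
        for score, before, after in applicants
        if cutoff - margin <= score < cutoff
    ]
    if not winners or not losers:
        raise ValueError("need applicants on both sides of the threshold")
    return mean(winners) - mean(losers)


# Made-up applicants: (screening score, sales before, sales after).
applicants = [
    (62, 100.0, 110.0),  # far below the threshold, ignored
    (66, 100.0, 112.0),
    (68, 100.0, 114.0),
    (71, 100.0, 130.0),
    (73, 100.0, 134.0),
    (90, 100.0, 180.0),  # far above the threshold, ignored
]
effect = near_threshold_effect(applicants, cutoff=70, margin=5)
print(f"estimated effect near the threshold: {effect}")
```

Only the four firms within five points of the threshold enter the calculation, which is why the data requirement is modest.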

Since data analyzed in this approach are limited to those on the firms whose scores are close to the eligibility threshold, the volume of data required for analysis is much smaller. It is also free from the bias due to differences in firms' willingness to take advantage of public policies because all of the firms included in the two groups—i.e., selected and non-selected groups—are applicants for and thus willing to take advantage of the program. Furthermore, examining the relationship between scores and performance allows us to determine whether the observed results have been influenced by policymakers' winner picking behavior.

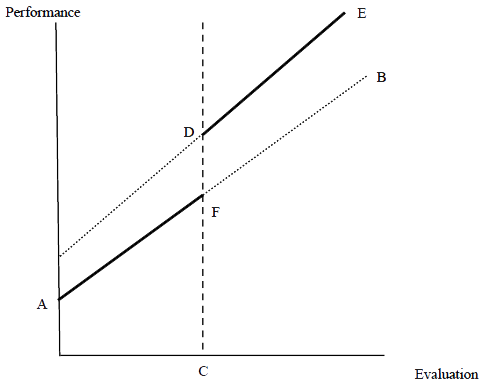

Figure 2 illustrates the basic idea of the RD approach. The horizontal axis measures firms' scores at the time of screening for eligibility, and the vertical axis represents their performance after a certain period. The vertical line C is the eligibility threshold dividing selected and non-selected firms. The line AB denotes the relationship between scores and performance in the absence of any policy interventions, assuming that firms with higher scores at the time of screening will also excel in later performance. Here, the introduction of an effective policy program would shift the performance line for selected firms from FB to DE. The RD approach is to examine whether this shift has occurred or not.
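One common way to check for such a shift is to fit a separate straight line to firms on each side of the threshold and compare the two fitted values at the threshold itself. On my reading of Figure 2, this gap corresponds to the vertical distance between points F and D. The sketch below is only an illustration under assumptions of my own: firms at or above `cutoff` are treated as selected, only firms within `bandwidth` points of the cutoff are used, and the data are artificial.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("scores on one side do not vary")
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def rd_jump(scores, outcomes, cutoff, bandwidth):
    """Estimate the jump in outcomes at the cutoff with two local lines."""
    # Re-center scores so that each fitted intercept is the value at the cutoff.
    left = [
        (s - cutoff, y)
        for s, y in zip(scores, outcomes)
        if cutoff - bandwidth <= s < cutoff
    ]
    right = [
        (s - cutoff, y)
        for s, y in zip(scores, outcomes)
        if cutoff <= s <= cutoff + bandwidth
    ]
    if len(left) < 2 or len(right) < 2:
        raise ValueError("need at least two firms on each side of the cutoff")
    left_at_cutoff, _ = fit_line(*zip(*left))
    right_at_cutoff, _ = fit_line(*zip(*right))
    return right_at_cutoff - left_at_cutoff


# Artificial data: performance rises with the score everywhere, and
# selected firms (score >= 70) get an extra 15 units.
scores = list(range(50, 90))
outcomes = [2.0 * s + (15.0 if s >= 70 else 0.0) for s in scores]
jump = rd_jump(scores, outcomes, cutoff=70, bandwidth=10)
print(f"estimated jump at the threshold: {jump:.2f}")
```

Because each side has its own slope, the tendency of higher-scoring firms to perform better anyway is absorbed by the fitted lines rather than being counted as a policy effect.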

Wasting no time, I requested help from Professor Hoshi and Professor Tetsuji Okazaki of the University of Tokyo's Graduate School of Economics to work together on a policy evaluation project using the RD approach. Specifically, we are examining the effectiveness of Jump Start Nippon, a startup support program implemented by the New Business Policy Office of the Ministry of Economy, Trade and Industry (METI) with funds secured in supplementary budgets. As the volume of data required was minimal, we could start analyzing the data quickly. We have been making good progress and will be announcing our findings soon.

Policy evaluation and evidence-based policymaking from the viewpoint of practitioners

Today, evidence-based policymaking is attracting significant attention, giving renewed importance to policy evaluation on a program-by-program basis. Evaluation techniques have evolved over the years. For instance, the randomized controlled trial (RCT), used in the form of A/B testing to analyze consumer behavior in the business world, has been employed for the precise measurement of policy effects, and the RD approach discussed in this article has been used increasingly (Note 6). We should make active use of these new analytical techniques to evaluate government policies in practice.

To achieve that end, we must first change the existing policy system to incorporate data collection and other evaluation processes into its design. For instance, in order to analyze a support program for startups using the RD approach, it is crucial to collect data on sales, the number of employees, fundraising, and so forth, both before and some time after the implementation of the program, for two groups of companies: those assisted by the program and those not. Thus, it is important to build in a mechanism to collect the necessary data beforehand, for instance, by requesting applicants for a program to cooperate with surveys and including a statement to that effect in the application guidelines. Considering the need to collect data for subsequent evaluation when designing a policy program, and ensuring appropriate evaluation based on appropriate data, will improve the efficiency of policy evaluation. When more findings from policy evaluation and analysis become available, it will become possible to compare the effects of different policy programs, which in turn will help evidence-based policymaking take root.

Lastly, I would like to cite two challenges for evidence-based policymaking in Japan as seen from the viewpoint of practitioners, setting aside for now the question of whether they can be overcome.

The first challenge is the continuation of policy programs. In his book, Boulevard of Broken Dreams: Why Public Efforts to Boost Entrepreneurship and Venture Capital Have Failed—and What to Do About It (Note 7), Professor Lerner points to the need to allow sufficient lead time. Indeed, it often takes several years for a policy measure to take root. In particular, in the case of policy programs designed to create an ecosystem for fostering human resource development or new industries, it may be several decades before they start delivering the intended results. Short-lived policy programs are also problematic from the viewpoint of analysis because they do not run long enough to allow sufficient time-series analysis.

However, the reality in Japan is that policy programs are rather short-lived, typically replaced by other programs in three to five years. Some of those financed by supplementary budgets last for only one year. Continuing policy programs for the sake of enabling their evaluation is like putting the cart before the horse. Still, I believe that we should take this opportunity to correct the excessive short-termism in government policies observed in recent years, as evidence-based policymaking gathers momentum and the relationships between the continuation of policy programs and their effects become evident.

The second challenge is the reflection of policy evaluation results in subsequent policy. Under the current system, which calls for screening budget proposals and reviewing public projects, we tend to fail to utilize policy evaluation results fully. Although public projects and policy programs are subject to yearly review, it seems as if cutting and/or eliminating budget allocations were the sole purpose of such review. I have never heard of a project being highly evaluated and consequently awarded a significant increase in budget. It is important to eliminate wasteful spending. However, it is also necessary to expand and/or maintain over a long period those policy measures proven effective. Shifting from a demerit system to a merit system that provides an incentive by properly rewarding good policies would prompt practitioners to engage in policy evaluation proactively.

As discussion on evidence-based policymaking deepens, I expect to see the full implementation of policy evaluation utilizing new analytical techniques. When that happens, new policy programs will be formulated based on the evaluation of past ones, enabling government initiatives to deliver greater effects. As a policy program practitioner, I would like to engage in proper evaluation of the programs in which I was involved.