Policy evaluation and pseudo-scientific lies

I have been with the Research Institute of Economy, Trade and Industry (RIETI) for 15 years. Because of the position I hold, I am often asked by relevant government officials to conduct third-party reviews or appraisals of policy evaluations carried out by universities and various research organizations, including think tanks and consulting firms.

There has been a growing awareness of the importance of quantitative policy evaluation in recent years. However, contrary to what this might suggest, my personal impression is that policy evaluations presented as being based on scientific evidence are quite often grossly inappropriate, whether or not there is ill intent. I find it very unfortunate that many of them, whether documents or reports, must be read with heavy caution.

This is not a problem unique to Japan. Manski (2011)* reports that the United States is also facing a similar problem and things are getting serious, citing a series of specific examples with real names. Although I am not aware of any specific cases in Europe, transitional economies, or developing economies such as China and India, chances are that the situation is very much the same in those countries.

In this article, I draw on some examples of pseudo-scientific lies I have seen or heard in this area of research to explain how inappropriate quantitative policy evaluations are generated, what typical tricks are employed in such practices, and how to detect them. I will try to keep the language as plain as possible so that government policymakers can better understand and put into practice what they read.

Although I welcome any criticism or personal attacks about this article or myself, information on specific cases of which I am unaware and requests for advice on instances that are unusual by any standard would be most welcome.

Background and factors behind the production of pseudo-scientific lies

Background and factors behind inappropriate policy evaluations based on seemingly scientific evidence can be classified into two types, namely, structural factors and technical factors. The former can be defined as organizational or systemic problems of parties contracting out policy evaluation work (i.e., government agencies) and those undertaking the work (i.e., think tanks and universities), whereas the latter can be defined as technical problems regarding the competence and qualification of individuals or units undertaking analysis for policy evaluation within research organizations (e.g., think tanks) or government agencies, including the validity and ethicality of such competence and qualification (Table 1).

| (1) Structural Background: Factors contributing to the propensity to resort to policy evaluation with predetermined results |
|---|
| (2) Technical Background: Factors contributing to the fabrication or derivation of convenient evaluation results |

Now, my esteemed readers, how many of these ring a familiar bell?

One typical pattern of structural factors I have personally encountered is the combination of an organizational culture that has no appreciation for courageous withdrawal on the part of government agencies as contracting parties and various conflicts of interest on the part of contracted parties undertaking analysis and evaluation. Wanting to affirm their current policies and secure budgets and staff for their implementation, government agencies see policy evaluation as a tool to justify their demands. Meanwhile, driven by monetary and/or competitive motives, analysts and evaluators respond by delivering "results" catering to the needs of their clients, an act for which they deserve to be accused of prostituting their learning.

Another pattern is that novelty-seeking researchers, whether at universities or other research institutes, go too far in their pursuit of "surprise" and mobilize the best of analytical techniques to conduct irrelevant research, which is so off the point that colleagues become embarrassed and bewildered.

As for the technical background, there is what I call the "case of stepping on the landmine," in which an outsourcing government agency accepts the result of an improper evaluation because it lacks basic knowledge of statistical techniques. There is also the "case of falling to the dark side." This refers to a situation where analysts and evaluators give in to pressure from their client, thereby engaging in the intentional or implicit selection of data and analytical techniques, and/or resorting to self-serving interpretation and reporting practices.

Although not as frequent as the above two cases, I have encountered some appalling "policy evaluations" conducted by independent organizations such as external research institutes and international agencies. In one example, a conclusion was drawn based on an unacceptably biased survey as a result of an attempt to criticize for the sake of criticism. In another, evaluators used excessive assumptions and yet failed to address deviations from or contradictions among them, ending up with inconclusive evaluations.

Thus, unfortunately, deeply rooted motives for producing pseudo-scientific lies in policy evaluation exist in all fields, in all organizations, and at all times.

"Tricks" employed to forge pseudo-scientific lies

Some of the factors discussed above, such as organization-related factors, are outside my area of expertise. However, technical and certain other factors have been my (unintended) research subject for many years. Thus, in what follows, I would like to provide an overview of my research and explain, in an easy-to-understand manner, typical tricks used to forge pseudo-scientific lies as seen from the dark side. Regarding problems with statistics per se, I would advise readers to read Darrell Huff's famous book How to Lie with Statistics.

(Intentional selection and blending of statistical data sources and sample years)

Panel data analysis using microdata obtained from statistical surveys has been very much in fashion in recent years. However, panel data analysis has a unique characteristic that is "convenient" and troublesome. Specifically, it allows researchers to derive completely different results by arbitrarily selecting targets and years subject to analysis. They can also produce very "spicy" results by aggregating microdata as appropriate for their needs and implicitly readjusting weights assigned to data, or by removing outliers in an arbitrary manner.
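The point about sample years can be illustrated with a minimal sketch. It assumes a single hypothetical yearly series that merely fluctuates and a hypothetical policy start year of 2011; every number and name is made up for illustration and does not come from any actual evaluation. The code computes a simple "after minus before" mean difference for every possible choice of first pre-policy year and last post-policy year.

```python
from itertools import product
from statistics import mean

# Hypothetical yearly outcome that only fluctuates (illustrative numbers, not real data).
OUTCOME = {2006: 104, 2007: 102, 2008: 100, 2009: 90, 2010: 95,
           2011: 98, 2012: 101, 2013: 99}
POLICY_YEAR = 2011  # assumed first year of the policy


def window_effect(pre_start, post_end):
    """Mean outcome after the policy minus mean outcome before it, for one window."""
    pre = [v for y, v in OUTCOME.items() if pre_start <= y < POLICY_YEAR]
    post = [v for y, v in OUTCOME.items() if POLICY_YEAR <= y <= post_end]
    return mean(post) - mean(pre)


def all_window_effects():
    """Estimated 'effect' for every admissible choice of sample years."""
    pre_starts = [y for y in OUTCOME if y < POLICY_YEAR]
    post_ends = [y for y in OUTCOME if y >= POLICY_YEAR]
    return {(s, e): window_effect(s, e) for s, e in product(pre_starts, post_ends)}


effects = all_window_effects()
worst, best = min(effects.values()), max(effects.values())
print(f"estimates range from {worst:+.2f} to {best:+.2f}")
```

With these made-up figures, the same series yields a slightly negative "effect" or a clearly positive one depending only on which years are kept, which is why a reviewer should ask why a particular window was chosen.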

(Exploration using data from a biased sample, such as those not properly representing the population and those containing outliers)

In econometrics, randomized experiments are highly regarded as a tool to provide credible evidence. However, even when the randomized data used for research are obtained from a sample that is not fully verifiable, as may be the case where the entire research work is outsourced to an external research firm, it is still counted as a randomized experiment. In other words, randomization does not guarantee the validity and adequacy of the sample selected. It is an old trick to interview randomly selected drunkards in front of JR Shimbashi Station or conduct a public opinion survey by calling randomly selected home phone numbers, and present them—i.e., Shimbashi drunkards and those staying at home during weekday daytime—as a representative sample of Japanese people.

(Disregard or misapplication of external factors that occurred in sample years or might have affected targets for analysis)

Ceteris paribus, or holding other things constant, is a fundamental prerequisite to the proper evaluation of treatment effects such as those of policy interventions. Accordingly, before-after analysis without any control group and time series analysis in which relevant explanatory variables are omitted are very convenient for those contriving to produce effects that do not exist by misattributing to the policy either external, non-treatment factors that occurred incidentally or the results of endogenous selection (such as Ashenfelter's dip*). It is generally well known that correlation does not imply causation. However, it is very often forgotten that causation cannot be evaluated accurately unless external factors and secondary effects are properly controlled for and taken into consideration.
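As a rough illustration of why a before-after comparison without a control group is so convenient, the sketch below uses hypothetical group means in which an external shock raises both groups by the same amount. The numbers, the variable names, and the assumption that the shock is common to both groups are all illustrative.

```python
def before_after(treated_before, treated_after):
    """Naive estimate: change in the treated group only."""
    return treated_after - treated_before


def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Change in the treated group minus change in the control group."""
    return (treated_after - treated_before) - (control_after - control_before)


# Hypothetical values (assumptions for illustration only).
TRUE_EFFECT = 2.0    # what the policy really adds
COMMON_SHOCK = 5.0   # external factor hitting both groups in the sample period

treated_before, control_before = 50.0, 40.0
treated_after = treated_before + COMMON_SHOCK + TRUE_EFFECT
control_after = control_before + COMMON_SHOCK

naive = before_after(treated_before, treated_after)
did = diff_in_diff(treated_before, treated_after, control_before, control_after)
print(f"before-after: {naive}, difference-in-differences: {did}")
```

Under these assumptions the before-after figure credits the policy with the external shock as well, while the comparison against a control group recovers the assumed true effect. The comparison is only as good as the assumption that both groups faced the same shock.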

(Abuse of or deviation from assumptions in analysis and/or disregard of basic theories)

For any analytical technique, there are some assumptions that must be fulfilled as a prerequisite to its application. In the case of evaluating treatment effects, it is usually questioned whether pre-treatment heterogeneity between treated and control groups has been properly taken into account, with a particular focus on whether the conditional mean independence (CMI) assumption holds with respect to selection between the two groups. However, outsourcing government agencies rarely check whether other assumptions hold. In particular, some government officials loathe the troublesome task of checking for the absence of group correlation or serial correlation in the error terms, along with other prerequisites, because doing so could lead them to unwittingly make an accurate assessment of the effectiveness of policy measures, which would negate their efforts to falsify or overstate policy effects by underestimating the standard error.

For the same reason, making an abrupt proposal to adopt a novel evaluation technique—typically one labeled with a foreign name unheard in Japan—is an extremely effective way to blur the question of whether all of the fundamental assumptions hold.
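The underestimation of standard errors mentioned above can be sketched with a small simulation. It assumes hypothetical data in which every observation in a group shares one common shock, and it compares a naive standard error of the overall mean, which treats all observations as independent, with one computed from group means. The group count, group size, and seed are arbitrary assumptions.

```python
import random
from statistics import mean, stdev


def simulate_clustered_sample(groups=30, per_group=20, seed=0):
    """Hypothetical data: each group shares one shock, plus individual noise."""
    rng = random.Random(seed)
    data = []
    for _ in range(groups):
        shock = rng.gauss(0, 1)  # common to every observation in the group
        data.append([shock + rng.gauss(0, 1) for _ in range(per_group)])
    return data


def naive_se(data):
    """Standard error of the mean, wrongly treating all observations as independent."""
    flat = [x for group in data for x in group]
    return stdev(flat) / len(flat) ** 0.5


def cluster_se(data):
    """Standard error of the mean based on group means (equal-sized groups assumed)."""
    group_means = [mean(group) for group in data]
    return stdev(group_means) / len(group_means) ** 0.5


data = simulate_clustered_sample()
print(f"naive SE: {naive_se(data):.3f}, group-based SE: {cluster_se(data):.3f}")
```

In this setup the naive figure is markedly smaller than the group-based one, so a report that ignores group correlation will tend to look more "significant" than the data warrant.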

(Underestimation and far-fetched interpretation of potential deviations and errors)

When a coefficient is significant at the 5% level, it simply means that the null hypothesis that the coefficient is zero is rejected with a risk probability of 5% (that is, if the true coefficient were zero, a result this extreme would still arise by chance about 5% of the time), not that a certain policy measure will deliver its intended effects with a probability of 95%. However, for whatever reason, many of those at outsourcing government agencies who do not have sufficient knowledge of statistics have a soft spot for this particular percentage, i.e., 95%, and they are pleased to see the phrase "95% significance" in evaluation reports. Likewise, in cases where there exist differences between treated and control groups prior to any treatment, statistical testing produces seemingly very favorable results. Thus, regression discontinuity analysis and cross section analysis in which data on intertemporal changes are not used are highly appreciated in some quarters.
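What the 5% figure does and does not mean can be checked with a short simulation. The sketch assumes a hypothetical policy with exactly zero effect, draws many samples from a standard normal distribution, and applies a two-sided z-test with known standard deviation at the 5% level. The trial count, sample size, and seed are arbitrary assumptions.

```python
import random


def false_positive_rate(trials=2000, n=50, seed=0):
    """Share of 'significant' results when the true effect is exactly zero."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0, 1) for _ in range(n)]  # no effect by construction
        z = (sum(sample) / n) * n ** 0.5               # z statistic, known sd = 1
        if abs(z) > 1.96:                              # two-sided 5% critical value
            rejections += 1
    return rejections / trials


rate = false_positive_rate()
print(f"share of 'significant' results with no true effect: {rate:.3f}")
```

Roughly one test in twenty comes out "significant" even though nothing works, which is all the 5% level promises. It says nothing about a 95% chance that the policy is effective.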

Basic steps for detecting pseudo-scientific lies

Putting things in a new light, that is, seeing them from the perspective of government agencies as the parties responsible for detecting typical tricks such as those described above, illuminates some key points to check and steps to follow, as shown below. Some readers might question the effectiveness of those measures, as they are so simple. However, let me once again emphasize that what I write in this article is based on actual cases that I have encountered first-hand in the course of working in the field of policy evaluation. I believe that taking the steps listed below can prevent a considerable portion of pseudo-scientific lies (Table 2).

Putting it the other way around, fair and right-thinking analysts and evaluators should explain the key points in the sequential order shown below when reporting analysis and evaluation results to their clients, and ensure that they present evidence to show the legitimacy and validity thereof.

| (1) Check the source of samples analyzed, sample years, sample size (number of observations), and whether or not the samples have been subjected to any preliminary data processing |
|---|
| (2) Provide evidence for the representativeness of samples analyzed and the legitimacy of the preliminary data processing employed |
| (3) Check how observed indicators have changed over the sample period for each sample analyzed (graphic presentation of numerical changes for treated and non-treated groups) |
| (4) Provide evidence for the legitimacy of analytical techniques applied, and check assumptions made |
| (5) Perform a sensitivity analysis by taking potential deviations and errors into account, and make a safe-side (conservative) judgment of the outcome |

A case example of pseudo-scientific lies: Evaluation of rumor-caused damage associated with the Fukushima Daiichi nuclear power disaster

Partly in an attempt to do my own soul-searching, I would like to share a mistake I made recently as a specific example, and explain how we can apply the above procedure for detecting pseudo-scientific lies.

We can make a quantitative evaluation of the economic damage to agricultural products from Fukushima prefecture caused by rumor associated with the Fukushima Daiichi nuclear power disaster by using their prices and volumes traded at the Tokyo Metropolitan Central Wholesale Market. There were no particular problems in the credibility of the statistical data source, preliminary data processing, etc., and I made a careful observation by presenting them in graphics. Thus, all of the conditions listed in (1) through (3) above have been met. So was the condition in (5) as I clearly defined the criteria for determining the "continuation" or "ending" of the damage. However, in performing a difference-in-differences (DID) analysis in February 2017,* I failed to pay sufficient attention to the assumptions made in the analysis, resulting in a material mistake regarding the condition in (4).

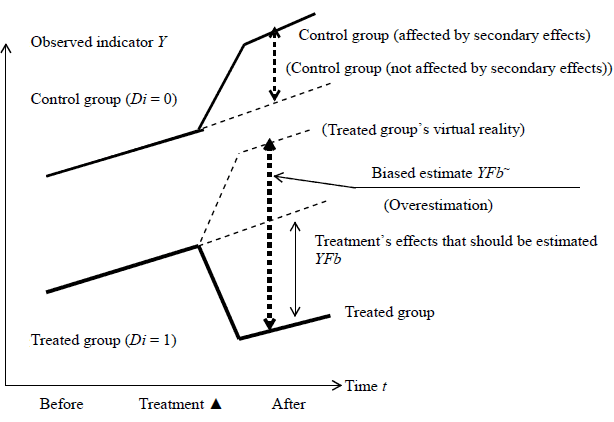

More specifically, I failed to confirm the validity of the stable unit treatment value assumption (SUTVA), one of the six assumptions of DID analysis, and was unable to find an effective way to do so. Simply put, I included in the comparison, without sufficient scrutiny, agricultural products from prefectures affected by secondary effects, such as Hokkaido and Saga, whose supply of agricultural products increased following the nuclear power accident in substitution for those from Fukushima and other prefectures suffering from harmful rumors about radioactive contamination. Hence, the fourth assumption for the DID analysis was violated, resulting in an overestimation of the magnitude of rumor-caused damage (Figure 1).
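The mechanism can be sketched with made-up numbers. The example assumes a hypothetical outcome index, an assumed true damage of 10 points for the affected products, and a comparison group that gains 4 points through substitution. None of these figures come from the actual analysis. They only show the direction of the bias when products that benefit from secondary effects are used as the comparison.

```python
def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Change in the treated group minus change in the comparison group."""
    return (treated_after - treated_before) - (control_after - control_before)


# Hypothetical index values (assumptions for illustration only).
TRUE_DAMAGE = -10.0       # assumed true rumor-caused change for affected products
COMMON_TREND = 3.0        # market-wide change affecting every product
SUBSTITUTION_GAIN = 4.0   # secondary effect enjoyed by substitute suppliers

treated_before = 100.0
treated_after = treated_before + COMMON_TREND + TRUE_DAMAGE

clean_before = 100.0      # comparison products untouched by the event
clean_after = clean_before + COMMON_TREND

spill_before = 100.0      # comparison products that gained from substitution
spill_after = spill_before + COMMON_TREND + SUBSTITUTION_GAIN

did_clean = diff_in_diff(treated_before, treated_after, clean_before, clean_after)
did_spill = diff_in_diff(treated_before, treated_after, spill_before, spill_after)
print(f"clean comparison: {did_clean}, comparison with spillover: {did_spill}")
```

Under these assumptions the clean comparison recovers the assumed damage, while the comparison group that gained from substitution adds its own gain to the estimate and overstates the damage.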

Further investigation revealed that very few studies have been conducted on measures to confirm the validity of the SUTVA (i.e., the nonexistence of bias associated with secondary effects of the treatment in question) or on measures to detect and correct any such bias. Thus, I developed and applied certain measures to re-evaluate the damage caused by nuclear rumors, and managed to obtain presumably valid results in December 2017.* I would like to take this opportunity to apologize for the trouble I have caused to the parties and individuals concerned during this period of time.

Organization-wide systematic development of capabilities to detect pseudo-scientific lies

I have provided an overview of the problem of pseudo-scientific lies in policy evaluation. However, there are limits to what an individual government policymaker can do through personal efforts to address the problem.

Seen from a different angle, the analysis and measures explained above can be considered quality control activities for policy programs, a special kind of service provided by the government. Thus, it is extremely important for government agencies to make organization-wide efforts to implement these activities, as is the case for quality assurance and quality control activities undertaken by manufacturers. Government agencies should implement the following measures at the forefront of policymaking.

(Development of practical training and internal education programs to teach econometrics)

The first and foremost thing to do in order not to be fooled by pseudo-scientific lies is to equip government policymakers with the necessary knowledge.

Although many government agencies have training or other programs designed to educate employees on policy evaluation, they should change the nature of the program, shifting from classroom-style lecturing on the generalities to one that is more practical and focused on blind spots and points requiring attention in policy evaluation.

Almost all government agencies have their own research institutes, and many national universities offer public policy courses. Government agencies could request such research organizations to sort out and examine problematic policy evaluation cases, have them undertake such tasks as preparing and enhancing relevant materials and developing necessary human resources, and thereby increase the number of easily accessible policy evaluation experts. This is a win-win scenario, which would bring benefits to both government agencies and their affiliated research organizations over a medium- to long-term horizon.

(Reference to relevant research findings and offering of practical consultations at universities and research institutes)

Almost all of the knowledge I have shared in this article has been learned with much effort from actual cases of problematic policy evaluation, those that had been brought to me by officials at the Ministry of Economy, Trade and Industry and other government agencies. They intuitively found something wrong with the analysis and evaluation results and came to me seeking advice.

Unfortunately, it is impossible for a government-affiliated research organization to provide consultation on each and every problematic case. However, in an attempt to address frequently observed problems, I have recently begun making available a series of relevant explanatory materials, which are based on my lecture at the University of Tokyo's Graduate School of Public Policy (GraSPP) and provide brief explanations on the collection and analysis of failure cases of policy evaluation and the basic techniques applied. If you are interested, please visit my page at the RIETI website:

https://www.rieti.go.jp/users/kainou-kazunari/index_en.html

I also intend to continue to respond, to the best of my modest ability, to requests asking me to conduct a third-party review or appraisal of policy evaluations.

(Fostering an organizational culture that values fair and meticulous examination and courageous withdrawal)

For most government agencies, securing budgets, creating new policy programs, and enhancing existing ones are a mission of paramount importance for maintaining and developing their respective organizations. However, such policy programs must have been endorsed by the government's policy guidelines and actual demand for government services and proved to be rational through policy evaluation.

If evaluation results for a policy program that has already incurred costs for research, etc. turn out to be negative, the relevant government agency may demand that defects in analysis and evaluation be corrected or examined. However, if this does not result in the reversal of the initial evaluation results, the government agency should get the message and make profound improvements to the policy program in question or work toward a courageous withdrawal from the program. Those who make such a sober decision and the senior officers who approve it should be appreciated both within and outside the organization.

A significant portion of fiscal expenditures is being financed by borrowing from future generations in Japan. In the light of this reality, both postponing problems by shelving inconvenient policy evaluation results and forcing analysts and evaluators to conduct evaluations in an arbitrary manner are tantamount to manipulating the quality of special services called policy programs and definitely constitute an act of treachery against the country and future generations.

The original text in Japanese was posted on January 17, 2018.