Machine learning algorithms are increasingly being used in decision making. Web companies, ride-hailing services, and courts rely on algorithms to supply content, set prices, and estimate recidivism rates. This column introduces a method for predicting the counterfactual performance of new algorithms by treating data generated by older algorithms as a natural experiment. Applied to a fashion e-commerce service, the method increased the click-through rate and improved the recommendation algorithm.

Decision making based on predictions from machine learning (ML) algorithms is becoming increasingly widespread (Athey and Imbens 2017, Mullainathan and Spiess 2017). For instance, Amazon, Facebook, Google, Microsoft, Netflix, and other web companies apply machine learning to problems such as personalising ads and content (movies, music, news, etc.), setting prices, and ranking search results. Ride-hailing services such as Uber, Lyft, and DiDi also set prices with proprietary algorithms that use information about supply and demand at each point in time and location (Cohen et al. 2016).

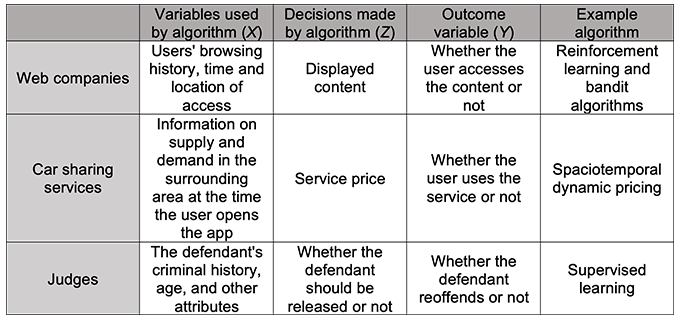

The use of ML algorithms in decision making is expanding beyond the digital world into high-stakes real-world settings such as court and bail decisions. COMPAS, software developed by Northpointe (now Equivant), uses supervised machine learning to predict defendants' recidivism risk. Its risk predictions are already being used by many judges in the US (Kleinberg et al. 2017). Other emerging areas include personnel recruitment, predictive policing, and medical diagnostics (Hoffman et al. 2017, Horton 2017, Shapiro 2017, Rajkomar et al. 2019). Table 1 summarises some of these examples.

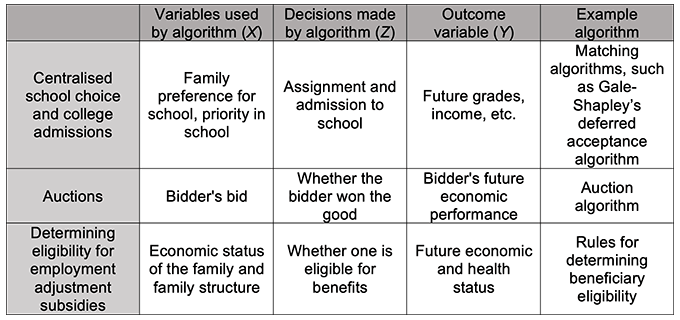

Non-ML algorithms are also common in public policy. For instance, matching and assignment algorithms are used in school choice and admissions systems (Abdulkadiroğlu et al. 2017, 2020, Narita 2020a), entry-level labour markets, and organ transplant markets around the world. Auction algorithms are widespread in many settings, from government bond markets and wholesale markets to online advertising and second-hand goods markets. In other public policy areas, algorithmic rules determine eligibility for benefits (Currie and Gruber 1996, Mahoney 2015). Such market design and eligibility rules are also forms of algorithmic decision making (Table 2).

An important part of algorithmic decision making is predicting the performance of new algorithms that have not yet been deployed. Accurate performance predictions allow an algorithm to be improved iteratively. The most obvious way to predict performance is a randomised controlled trial (RCT, or A/B test), in which the old and new algorithms are randomly assigned to users and their outcomes compared. However, RCTs are time-consuming and expensive, and they can raise ethical issues (Narita 2020b). Is there a way to predict performance using only the data naturally generated by past algorithms, without resorting to RCTs?

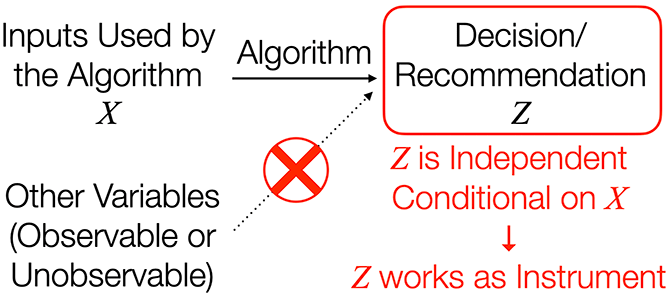

We propose a method that uses data accumulated by past algorithms to predict the counterfactual performance of a new algorithm. As detailed in Narita and Yata (2021), this method is based on the following observation (Figure 1): when an algorithm is used to make decisions, the data it generates almost always contain natural experiments (quasi-randomly assigned instruments), in which the decision is made (quasi-)randomly conditional on the algorithm's inputs. For instance, many probabilistic reinforcement learning and bandit algorithms are effectively RCTs themselves, because they randomise their decisions (Li et al. 2010, Precup 2000).
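To make the bandit case concrete, here is a minimal sketch, assuming the old algorithm logged, for every decision, the probability it assigned to the action it actually took. It uses inverse propensity weighting (IPW), a standard off-policy evaluation estimator; it is not necessarily the exact estimator used in Narita and Yata (2021) or Saito et al. (2020). The function name `ipw_estimate`, the click probabilities, the uniform logging policy, and the new policy are all hypothetical values chosen for illustration.

```python
import numpy as np


def ipw_estimate(rewards, logged_actions, logging_probs, new_policy_probs):
    """Inverse propensity weighting (IPW) estimate of a new policy's mean reward.

    rewards:          shape (n,), observed outcome (e.g. click = 1, no click = 0)
    logged_actions:   shape (n,), action chosen by the old (logging) algorithm
    logging_probs:    shape (n,), probability the old algorithm gave that action
    new_policy_probs: shape (n, n_actions), the new algorithm's action
                      probabilities evaluated at each logged context
    """
    n = len(rewards)
    # Probability that the new algorithm would have chosen the logged action.
    p_new = new_policy_probs[np.arange(n), logged_actions]
    # Reweight each logged reward by how much more (or less) often the new
    # algorithm would take that action than the old one did.
    weights = p_new / logging_probs
    return float(np.mean(weights * rewards))


rng = np.random.default_rng(0)
n, n_actions = 100_000, 3

# Hypothetical binary user feature (the context the algorithm sees).
x = rng.integers(0, 2, size=n)
# Hypothetical true click probabilities, indexed by [context, action].
click_prob = np.array([[0.02, 0.05, 0.03],
                       [0.06, 0.01, 0.04]])

# Old algorithm: recommends uniformly at random, i.e. it is itself an RCT.
logged_actions = rng.integers(0, n_actions, size=n)
logging_probs = np.full(n, 1.0 / n_actions)
rewards = rng.binomial(1, click_prob[x, logged_actions])

# Hypothetical new algorithm: deterministically picks action 1 for x = 0
# and action 0 for x = 1.
best_action = np.array([1, 0])
new_policy_probs = np.zeros((n, n_actions))
new_policy_probs[np.arange(n), best_action[x]] = 1.0

# Predicted click rate of the new algorithm, using only the old algorithm's logs.
estimate = ipw_estimate(rewards, logged_actions, logging_probs, new_policy_probs)
# Its true click rate, known here only because the data are simulated.
true_value = float(click_prob[x, best_action[x]].mean())
print(f"IPW estimate: {estimate:.4f}  true value: {true_value:.4f}")
```

The key requirement is overlap: the old algorithm must have given positive probability to every action the new algorithm might take in a given context; otherwise that action never appears in the logs and its weight cannot be formed.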

As a less obvious example, consider an algorithm that selects a unit whenever some variable predicted by supervised learning exceeds a threshold. Units whose predicted values lie just above and just below the threshold are nearly identical, yet they receive different decisions essentially by chance, depending on which side of the threshold they happen to fall. This is a local natural experiment in the style of a regression discontinuity design (Bundorf et al. 2019, Cowgill 2018).
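The threshold case can be illustrated with a textbook sharp regression discontinuity estimator. The sketch below fits a separate straight line to the outcomes on each side of the threshold and reads off the jump at the threshold, which recovers the effect of the old algorithm's decision for units near it. This local linear fit with a uniform kernel is a generic RD technique, not the method developed in Narita and Yata (2021), and the function name `rd_local_linear`, the score distribution, the cutoff of 0.6, the outcome model, and the bandwidth are all hypothetical.

```python
import numpy as np


def rd_local_linear(score, outcome, cutoff, bandwidth):
    """Sharp regression-discontinuity estimate of the decision's effect at the cutoff.

    Fits a separate straight line to observations within `bandwidth` below and
    above the cutoff (all weighted equally, i.e. a uniform kernel) and returns
    the jump between the two fitted lines at the cutoff.
    """
    below = (score >= cutoff - bandwidth) & (score < cutoff)
    above = (score >= cutoff) & (score < cutoff + bandwidth)
    # np.polyfit(x, y, 1) returns [slope, intercept]; centring the score at the
    # cutoff makes each intercept the fitted outcome exactly at the threshold.
    _, left_at_cutoff = np.polyfit(score[below] - cutoff, outcome[below], 1)
    _, right_at_cutoff = np.polyfit(score[above] - cutoff, outcome[above], 1)
    return float(right_at_cutoff - left_at_cutoff)


rng = np.random.default_rng(1)
n = 50_000

# Hypothetical score predicted by a supervised model, e.g. a risk score in [0, 1].
score = rng.uniform(0.0, 1.0, size=n)
cutoff = 0.6
# The old algorithm's deterministic rule: select whenever the score clears the cutoff.
decision = (score >= cutoff).astype(float)

# Hypothetical outcome: smooth in the score, plus a true decision effect of 0.3.
true_effect = 0.3
outcome = 1.0 + 2.0 * score + true_effect * decision + rng.normal(0.0, 0.5, size=n)

estimate = rd_local_linear(score, outcome, cutoff, bandwidth=0.1)
print(f"RD estimate: {estimate:.3f}  true effect: {true_effect}")
```

The bandwidth trades bias against variance: a narrower window relies less on the straight-line approximation but uses fewer observations near the threshold.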

These natural experiments can serve a variety of purposes. They can be used to measure the treatment effects of different decisions, or to predict how a new decision-making algorithm is likely to perform once introduced. We formalise this observation for general algorithms and develop a method that improves an algorithm using only the data it naturally generates.

Potential applications of this method range widely, from business to policy. As a concrete application, we deployed our method to improve the design of the fashion e-commerce service ZOZOTOWN. ZOZOTOWN is the largest fashion e-commerce platform in Japan, with an annual gross merchandise value of over $3 billion. The company's founder is known for purchasing SpaceX's first civilian ticket to the moon for about $700 million.

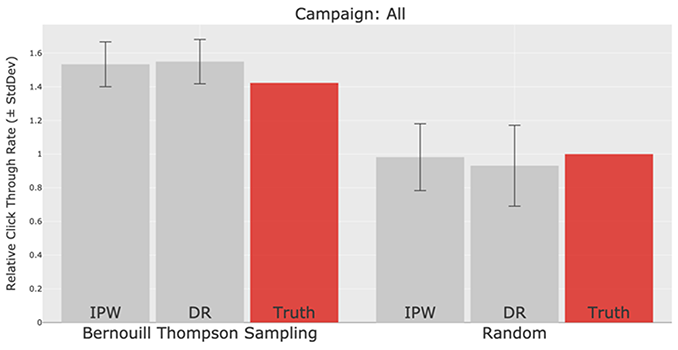

In this application, which we detail in Saito et al. (2020), we increased the click-through rate of ZOZOTOWN's fashion recommendations by about 40%. We also found ways to further improve the recommendation algorithm (Figure 2). The recommendation data and the code used in this implementation are open source and available on GitHub.

Authors' note: The main research on which this column is based (Narita et al. 2020c) first appeared as a Discussion Paper of the Research Institute of Economy, Trade and Industry (RIETI) of Japan.

This article first appeared on www.VoxEU.org on April 28, 2021. Reproduced with permission.